Memory management and safety in Bismuth VM

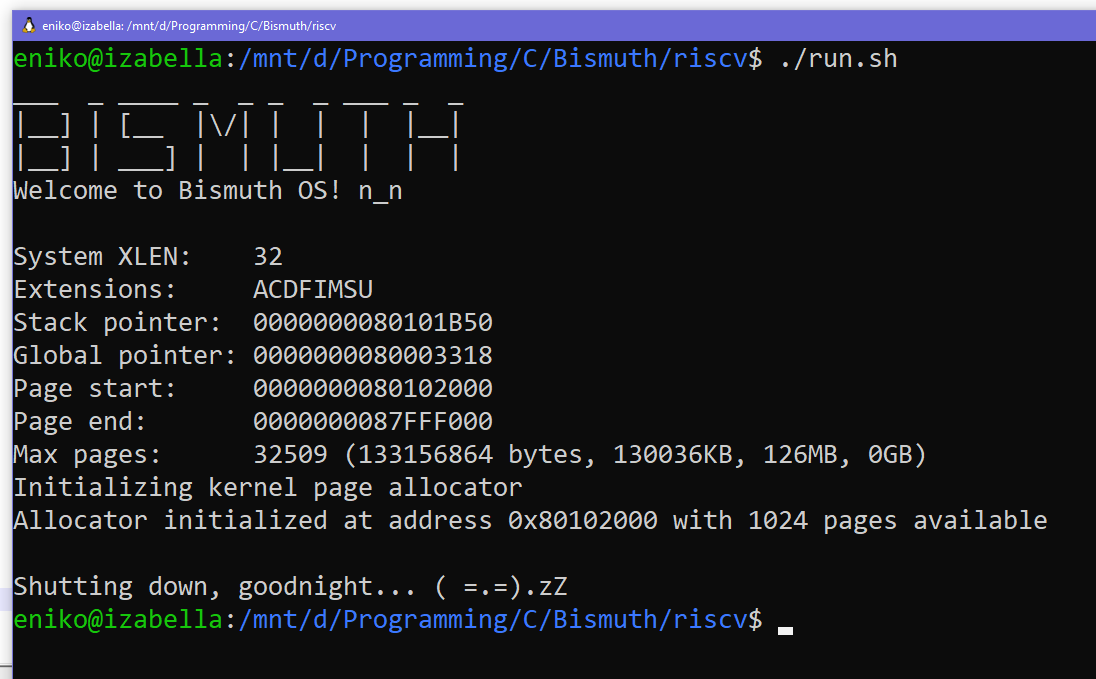

This is the second in a series of posts about a virtual machine I’m developing as a hobby project called Bismuth. In this edition we’re going to look at the VM’s design for memory management and safety. To start with I’ll remind you of the design goals for this VM as detailed in my last post, with those that apply here in bold:

- Must be fast

- The IR must be compatible with standard C

- Can run in a browser

- The VM must be easy to implement

Not to give away the twist, but when you combine points 2 and 4 with a VM that cares about memory safety (i.e. programs should not be able to do things like read outside of the bounds of an allocated region of memory) things can get a little bit complicated. So let’s walk through the stages of grief that I experienced and the solutions I came to during the bargaining stage when designing the memory management and safety features of the Bismuth VM.

Simple is complicated

Point 4 says the VM must be easy to implement. In my experience this means the VM must be relatively simple, and as I’m sure we can all agree* the simplest language is C. Why? Because C is basically fancy machine code. Everything’s a number. Can’t get simpler than that. Sometimes the number points to a region of memory where other numbers live. It’s numbers all the way down, and I like that about C. So if we want a VM that’s simple, well, it should be like C, right? Especially since one of the design goals is to have an IR that’s compatible with standard C.

* I’m sure plenty of people will disagree with this, actually.

So that’s easy! When the IR wants to allocate memory, we just put in a call to malloc (or calloc if you’re feeling fancy) and shove the pointer onto the stack. There’s a few problems with this approach, including that on 64-bit systems the pointer would be 64-bit and the VM is a 32-bit machine, but we’ll start with the more obvious one.

You see, C has a problem. If everything is a number, and an array is just some arbitrary region in memory full of other numbers with a number pointing to it, then there’s no reason you can’t just read arbitrarily from anywhere in memory. If you want to look at a number that’s before the start of or after the end of an array, you can totally do that. Nobody’s going to stop you! Well, until your program segfaults anyhow.

These are also called buffer over or underruns, and the Rustaceans tell me those are like, bad? Something about CVEs? I don’t know, I’m just a game developer, we don’t care** about that sort of thing.

** This is a joke, please put down the pitchfork. Although I suppose there was the time Untitled Goose Game had arbitrary code execution in its save system.

So we probably want some kind of sandboxing behavior. After all, accessing arbitrary memory addresses is bad because it can cause the VM to segfault (and a VM should probably never do that) but it’s also bad because we don’t want user programs to be able to poke around in the memory used by the VM itself, because that’s a security risk.

We need some other strategy for separating what memory belongs to a program (and which memory belongs to which program if we can run multiple simultaneously) and what memory belongs to the VM. That means we can’t use malloc and its ilk. Right?

Nobody wants to write a heap allocator

Let me be clear: I’ve written malloc-style heap allocators, composable memory allocators, and a variety of other memory allocators. I’ve written a post on Cohost explaining memory allocators (which I should repost here soon.) I know how memory allocators work and even I can’t stomach the thought of writing another heap allocator.

This is a bit of a problem. Because we can’t use malloc, and we need to have separate memory for the VM and user programs, which means we need separate heaps, which means we need to write a heap allocator.

And I know what you’re thinking: just use one of the C memory allocators with permissive licenses that are freely available on Github! And I too had that thought. But going through all of them I noticed that many don’t have the features I need. For example, a lot of them just function as a drop-in replacement for malloc. That’s great if that’s what you need, but if you need multiple heaps that’s not going to help a lot!

The good ones are also complicated and design goal number 1 was “must be fast” so we need a good one. The good ones with different heap instances are even more complicated. Looking at integrating some of these gave me such an overwhelming feeling of ennui that I thought to myself “I would rather write my own heap allocator.” But I quickly came to my senses and realized that nobody wants to write a heap allocator, not even me, and even if I did there’s no guarantee it’d be fast anyway. I realized that mandating writing a heap allocator or integrating an off-the-shelf allocator was at odds with the design goal of the VM being easy to implement.

Plus while many of those allocators are great, what if you’re writing an implementation of the VM in C#? Or JavaScript? Or Python? Or Lua? Then you can’t use the off-the-shelf ones and still have to write your own. Don’t say it couldn’t happen, I have personally done much stranger things and I’m friends with many sickos who would also do things like this.

So we have to use malloc because it’s fast and eases implementation of the VM. Plus on higher level languages, instantiating an array (or table, yes Lua you can put your hand down now) is conceptually very similar to a call to malloc so things remain very consistent.

But we can’t use malloc… or can we?

I’ll handle it my way

Okay, so, theoretically we could implement this VM in something like C#, right? And a “pointer” would just be a reference to an array with some kind of offset. Could we apply that model to malloced memory? Well, of course we can! I mean, that’s basically what high level languages do, right? Allocate some memory and call it an array and provide a reference. So that’s what we have to do, too.

I decided to call these references “handles” because to my mind a handle is an opaque indirect reference to something that you can only really interact with through system or API calls. So a pointer becomes a handle which points to some allocated memory somewhere, plus an offset. Great! What system calls do we need for this system? As it turns out, it’s pretty simple. These are the memory management operations available to Bismuth’s IR:

- alloc size : allocate memory of size bytes and return a handle.

- free handle : free memory associated with handle.

- load handle offset : return the 32-bit value contained in the memory pointed at by handle starting at byte offset.

- stor handle offset value : store the 32-bit value into the memory pointed at by handle starting at byte offset.

I’m omitting load/store operations for different bit depths for brevity here.

So the plan is this:

- When a program wants to allocate memory, use

callocto allocate and zero out some memory, generate a unique value that represents the handle to that memory, and store this information in a hash table with the handle as the key and the pointer plus size as the value. The handle table is unique to each user program, so programs can’t snoop on each other’s memory. - If a program is done with memory, it can free it via the handle. This removes the key-value-pair from the handle table.

- When a program wants to load from or store to memory, do this via the handle by providing an offset. The VM looks up the pointer and size information, bounds checks the offset, and if it’s in bounds performs the load or store. We can also prevent dereferencing stale pointers because any freed pointers won’t be in the handle table.

This approach will work, though there are a couple downsides that make this more of a compromise than an ideal solution.

First, indirecting every memory access through a table is going to slow things down. I don’t know how much yet, but given there are languages where literally every member access is a hash table lookup, I think it’s going to be fine. There’s probably ways of caching things to improve matters.

Second, C-style pointers become more complicated. Instead of a single integer, it becomes a handle and offset struct. This is fine because I happen to know that the C standard says that pointers need not be integers, only that they can be converted to integers, and even that operation may be undefined depending on the implementation. The fact that pointers are usually simple integers is just a happy coincidence, not mandated by the standard. So you could still implement standard-compliant C on top of this implementation, unless I’m overlooking something. Plus, on a modern 64-bit system the pointer could have the handle value in the top 32 bits and the offset in the bottom 32 bits.

Generating handles

At this point I started happily implementing my VM’s memory management systems, when everything came to a screeching halt. How do I actually generate the unique handle values for the pointers?

My first idea was to just use the pointer itself as the value. That’s certainly the easiest, and guaranteed to be unique. Just one small problem: my OS is 64-bit and my VM is 32-bit. I can’t cram a 64-bit integer into a 32-bit integer, or take a 32-bit chunk of it and guarantee it’s unique. I could make every heap for every program a contiguous chunk of memory (webassembly does this) so that every pointer is always a 32-bit offset from the start of that memory, but then I couldn’t use malloc!

In the before-times there were architectures that had more memory than their system’s word size. For example, 16-bit machines with 24 bits of memory, or 8-bit machines with 16 bits of memory. I could keep my VM using 32-bit integers and just consider handles a 64-bit integer.

This would unfortunately be very messy. Making this change would create a separate class of incompatible values, with “normal” IR operations that expect 32-bit values unable to operate on handles. I’d have to create different versions of relevant operations that work exclusively with handles, and that would greatly increase the VM’s complexity. Suffice to say this would be incompatible with the “must be easy to implement” design goal. So that wasn’t a great fit either.

The simplest idea I had was to just use an incrementing 32-bit integer. Unfortunately that’s only guaranteed to be unique so long as the value doesn’t overflow, which it eventually would. This would still work though if whatever method doles out handle values checks the handle table to see if it contains that value, and if so continues to loop until it finds a handle that isn’t taken.

I didn’t like that for two reasons though:

- It’s quite possible for a lot of memory to be allocated at the start of a program that’s never deallocated. This means generating the handle value could stall on allocations for some amount of time if the handle value overflowed.

- Though I can’t quite put my finger on it, it just felt like having valid handles all be on the low end of a 32-bit integer was dangerously insecure.

So, like I always do, I decided to complicate things.

Linear feedback shift registers

I’m not going to explain everything about linear feedback shift registers here. Partly because I don’t have that kind of time, and partly because I don’t really understand how or why they work, just that they do and that they’re awesome. If you want a thorough explanation you can watch DavidXNewton’s video about them. The short version is that a linear feedback shift register is a simple bit-shifting algorithm that, when using the correct maximum length term, yields an ideal non-repeating series of pseudo-random numbers.

This means that a 4-bit LFSR with the right term will generate 15 numbers, from 1 through 15, in a random order, without repeating any value, until all values have been exhausted. The series of numbers will then loop and repeat. Transport Tycoon uses this for tile updates (see aforementioned DavidXNewton video) and Wolf3D and others use this technique to do fizzle fades.

They’re basically a shuffled list of integers up to N bits in a can, where “can” means “algorithm.” LFSRs are pretty nifty and I’m going to use them for generating handles. The code for them is also short enough that I can show you the entirety of my lfsr.h file right here:

#pragma once

#include <assert.h>

typedef struct lfsr16_t {

uint16_t value;

uint16_t term;

} lfsr16_t;

typedef struct lfsr32_t {

uint32_t value;

uint32_t term;

} lfsr32_t;

static inline uint16_t shift16(lfsr16_t* inst) {

assert(inst != NULL);

if (inst->value & 1) {

inst->value = (inst->value >> 1) ^ inst->term;

}

else {

inst->value = inst->value >> 1;

}

return inst->value;

}

static inline uint32_t shift32(lfsr32_t* inst) {

assert(inst != NULL);

if (inst->value & 1) {

inst->value = (inst->value >> 1) ^ inst->term;

}

else {

inst->value = inst->value >> 1;

}

return inst->value;

}

Feel free to use the above code snippet in your own projects if you like.

The fact that LFSRs never produce the number zero is also quite handy because it means we can just designate a handle of 0 as effectively the same as NULL. Neat!

Here’s how the VM generates new handles:

- Have a list of N maximum length LFSR terms.

- When a program starts, create an LFSR using a random term from this list and an initial value of 1. Store the term’s index in the list as the initial term as well.

- Whenever a handle value must be generated shift the LFSR to produce a number. If that number is 1 we know the LFSR has looped and should initialize a new LFSR with the next term from the terms list, wrapping back to index 0 if necessary. The initial term the program started with is always skipped in order to avoid stalls from long-lived early-program allocations. This means the initial sequence of handle values is only ever used once for the runtime of the program.

- We now have a pseudo-random handle value. Check if it already exists as a key in the handle table. If not, great! Return that handle. Otherwise, go back to step 3.

This may sound more complicated than it really is, so I’ll go through the relevant bits of code to show it’s not so bad. This is the function for initializing the handle manager for a user program:

static inline char* Handles_Init(HandleManager* handles) {

handles->InitialTerm = rand() & RANDOM_TERM_MASK;

handles->NextTerm = handles->InitialTerm;

Handles_NextLFSR(handles);

HandleMap_init(&handles->Table);

return NULL;

}

Select a random initial term index, set the next term index to the initial index, (the Handles_NextLFSR function will use, then advance it) initialize the LFSR, then the hash table. Next is the function which initializes the LFSR:

static inline void Handles_NextLFSR(HandleManager* handles) {

lfsr32_t lfsr = { 1, lfsrterms32[handles->NextTerm++] };

handles->LFSR = lfsr;

if (handles->NextTerm >= HANDLE_TERMS_COUNT) {

handles->NextTerm = 0;

}

if (handles->NextTerm == handles->InitialTerm) {

handles->NextTerm++;

if (handles->NextTerm >= HANDLE_TERMS_COUNT) {

handles->NextTerm = 0;

}

}

}

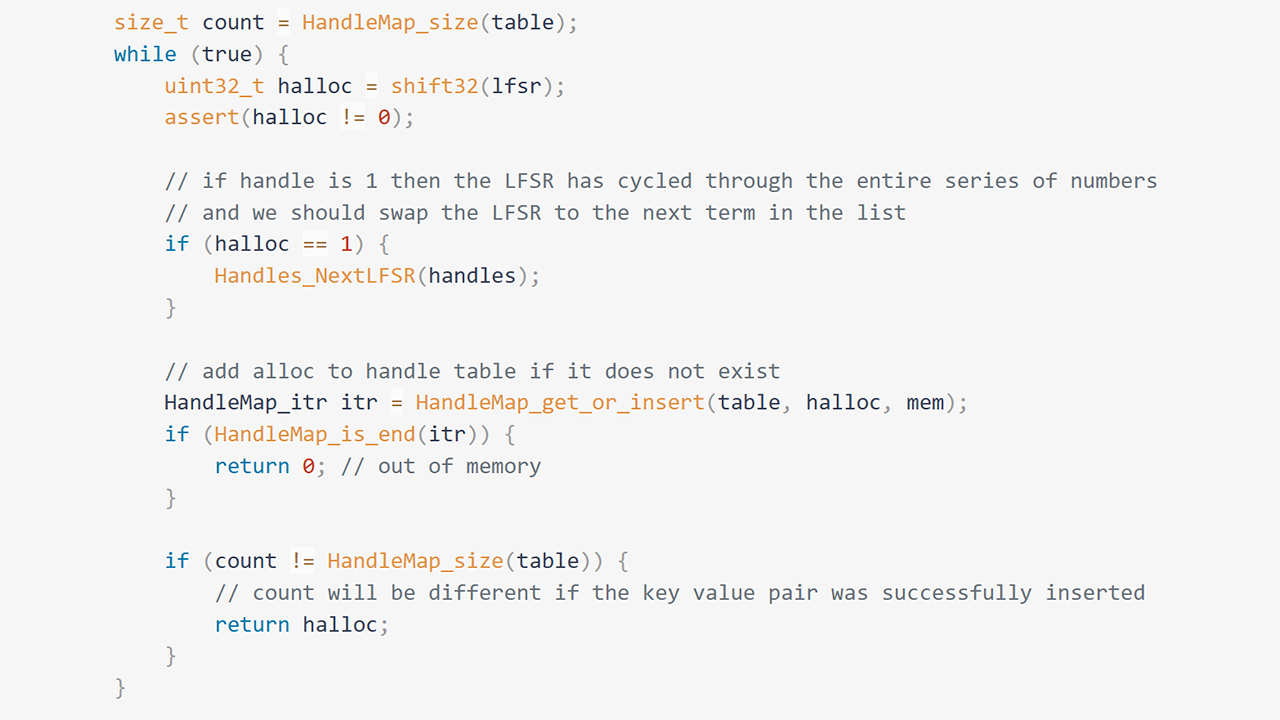

Pretty simple. Set the value to 1, and the term to whatever is in our terms list at the NextTerm index, then increment the next term index. If that index overflows, set it to 0. If the next term index is the initial term’s index, increment to skip it and check for overflow again. Finally here’s the snippet that generates the handle:

size_t count = HandleMap_size(table);

while (true) {

uint32_t halloc = shift32(lfsr);

assert(halloc != 0);

// if handle is 1 then the LFSR has cycled through the entire series of numbers

// and we should swap the LFSR to the next term in the list

if (halloc == 1) {

Handles_NextLFSR(handles);

}

// add alloc to handle table if it does not exist

HandleMap_itr itr = HandleMap_get_or_insert(table, halloc, mem);

if (HandleMap_is_end(itr)) {

return 0; // out of memory

}

if (count != HandleMap_size(table)) {

// count will be different if the key value pair was successfully inserted

return halloc;

}

}

Get the LFSR to spit out a new value, and if that value is 1 set up the next LFSR. 1 is still a valid handle value though, so we can still use it. Try to insert the handle into the handle table, returning the NULL handle to indicate failure if out of memory. If the handle was successfully inserted, return the handle value, otherwise try again.

I think this is a pretty robust process for generating unique and pseudo-random 32-bit handles to 64-bit pointers, and it avoids some obvious security pitfalls. There might be some I’m overlooking, but I can’t think of them. If you can think of anything I’ve missed, let me know in the comments.

Though this is the recommended way of doing it I probably won’t mandate in the VM’s specification that handle generation is implemented this way, in service of the “must be easy to implement” design goal. I’ll probably just leave it implementation defined. There’s loads of scenarios where a simple incrementing integer would probably be just fine.

So now we can allocate memory, access it via handles, and free it, and nobody ever needs to write their own stinkin’ memory allocator, fabulous! But astute readers may have noticed there’s a missing operation for handles.

Realloc

Later, when I was working on a language to sit on top of the IR and thinking about things like borrowing references, I realized an interesting property of this fancy handle indirection system. Using realloc in C has the annoying property that the pointer to your memory might wind up somewhere else because the previously allocated memory can’t be grown to the new size. In that case C allocates new memory of the appropriate size and copies the relevant sections of the old memory, and returns the new pointer.

But our VM uses handles, not pointers. The underlying pointer and size can change without having to change the value of the handle. This is great news for the final part of the memory management API:

- realloc handle size : resize the memory associated with handle to be size bytes and return either the previous value of handle, or a

NULLhandle on failure.

So reallocating memory in our VM will never mutate the handle. It either succeeds and the handle stays the same, or it fails and doesn’t affect the memory pointed at by the handle at all. I’m not sure how helpful this is truly going to be, but I think it’s a pretty neat side effect of the system. It’s like getting object references for free!

Conclusion

That concludes the memory management and safety design of the Bismuth VM. It’s not perfect, some compromises had to be made, but all in all I’m quite pleased. It hits all 3 of the relevant design goals and while it’s not as fast as one might like I think it’s fast enough. It’s compatible with the C standard. And importantly it keeps the VM much easier to implement, by not forcing anyone to deal with custom heap allocators.

I hope you’ve found this interesting, and that you’ll join me for the next post.